It is well known that Linux implemented four types of I/O schedulers (noop/deadline/anticipatory/cfq) in Linux kernel 2.6.10. The default is cfq in most distributions including RHEL, which is not so good then noop normally outperforms, but I'll talk this in other posts.

Linux I/O scheduler also has a functionality to sort incoming I/O requests in its request-queue for optimization. Queue size is configurable. Queue size is defined as "/sys/block/sdX/queue/nr_requests" then you can change queue length as follows.

Changing queue size is even effective for noop scheduler.

# cat /sys/block/sda/queue/nr_requests

128

# echo 100000 > /sys/block/sda/queue/nr_requests

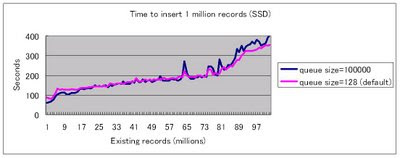

Here are benchmarking results about changing i/o scheduler queue size for MyISAM insert-intensive loads. Detailed are written in the slides at the UC.

Apparently increasing queue size was very helpful for HDD, but not helpful for SSD.

Let me explain about backgrounds.

On Linux side, I/O requests are handled by the following order:

system calls(pwrite/etc)

-> Filesystem

-> I/O scheduler

-> Device Driver/Disks

I/O scheduler sorts incoming I/O requests by logical block addresses, then sending them to a device driver.

I/O scheduler does not depend on storage devices so is helpful for some parts (i.e. minimizing disk seek overheads), not helpful for other parts (i.e. minimizing disk rotation overheads).

On the other hand, Command Queuing (TCQ/NCQ) also sorts I/O requests for optimization. SAS and recent SATA II disks support command queuing. The goal is partly duplicate from I/O scheduler. But TCQ can minimize not only disk seeks but also disk rotation overhead (See the link to wikipedia). The disadvantage of TCQ is that queue size is very limited (normally 32-64).

Based on the above, sorting almost all random I/O requests on I/O sheculer then sending them to TCQ would be nice.

Suppose 100,000 random read I/O requests are coming.

When I/O scheduler queue size is 128 (default in many cases), TCQ gets I/O requests by almost random order, so pretty high disk seek overhead happens for each action (requests within single queue is dispersed).

When I/O scheduler queue size is 100,000, TCQ gets I/O requests by fully sorted order, so seek overhead can be much smaller.

Increasing queue size does not have any effect on SSD because no disk seek happens.

This would explain my benchmarking results.

I/O scheduler queue size settings is not helpful for InnoDB because InnoDB internally sorts I/O requests to optimize disk seek overheads, and sending limited number of i/o requests controlled by InnoDB internal i/o threads. So the role is duplicate between InnoDB itself and I/O scheduler queue. Note that TCQ improves InnoDB throughput because disk rotation overheads are significantly reduced and such optimizations can not be done from application/kernel side.

MyISAM does nothing special (highly depending on OS) so this helps.

Updated in Apr 30: Added detailed benchmark conditions for people who are interested..

Here is a test script. I ran a single-threaded stored procedure on a same machine.

create table aa (id int auto_increment primary key,

b1 int,

b2 int,

b3 int,

c varchar(100),

index(b1), index(b2), index(b3)) engine=myisam;

drop procedure sp_aa;

delimiter //

create procedure sp_aa(IN count INTEGER)

BEGIN

DECLARE time_a, time_b BIGINT DEFAULT 0;

DECLARE done INTEGER DEFAULT 0;

DECLARE i INTEGER DEFAULT 1;

WHILE done != 1 DO

insert into aa values (i,rand()*count,rand()*count,rand()*count,repeat(1,40));

SET i = i + 1;

IF i % 1000000 = 1 THEN

SELECT unix_timestamp() into time_a from dual;

SELECT i, from_unixtime(time_a), time_a - time_b from dual;

SET time_b = time_a;

END IF;

IF i > count THEN

SET done = 1;

END IF;

END WHILE;

END;

//

delimiter ;

mysql test -vvv -e "call sp_aa(300000000)"

Then wait for a long long time...

# Default insert. no insert-delayed, no disable-keys, no delay-key-write, no mmap

H/W, OS, MySQL settings

Sun Fire X4150

CPU: Intel Xeon, 8 cores

RAM: 32GB (but limit filesystem cache size up to 5GB, no swapping happened)

HDD: SAS 15,000RPM, 2 disks, RAID 1, write cache enabled

SSD: Intel X25-E, Single drive, write cache enabled

OS: RedHat Enterprise Linux 5.3 (2.6.18-128.el5)

Filesystem: ext3

I/O Scheduler: deadline

MySQL 5.1.33

key_buffer_size: 2G

i/o stats:

queue size=128 (default)

avg-cpu: %user %nice %system %iowait %steal %idle

0.01 0.00 0.08 24.69 0.00 75.22

Device: rrqm/s wrqm/s r/s w/s rMB/s

sdb 0.00 0.87 0.00 575.60 0.00

wMB/s avgrq-sz avgqu-sz await svctm %util

2.25 8.01 142.44 247.03 1.74 100.00

(At running 12 hours, 13 mil rows were inserted)

queue size=100000

avg-cpu: %user %nice %system %iowait %steal %idle

2.06 0.00 5.32 29.66 0.00 62.96

Device: rrqm/s wrqm/s r/s w/s rMB/s

sdb 0.00 2487.33 0.00 2042.60 0.00

wMB/s avgrq-sz avgqu-sz await svctm %util

35.11 35.20 84402.36 43582.33 0.49 100.01

(At running 1.5 hours, 41 mil rows were inserted)

...

avg-cpu: %user %nice %system %iowait %steal %idle

0.07 0.00 0.19 24.82 0.00 74.91

Device: rrqm/s wrqm/s r/s w/s rMB/s

sdb 0.00 9.03 1.70 756.03 0.01

wMB/s avgrq-sz avgqu-sz await svctm %util

5.56 15.04 31981.72 127560.45 1.32 100.00

(At running 12 hours, 77 mil rows were inserted,

index size was 4.5 GB)

If running many more hours(days) so that index size exceeds filesystem cache, a lot of disk reads will happen. (I didn't have time to run that test before UC)