I recently did a disk bound DBT-2 benchmarking on SSD/HDD (MySQL 5.4.0, InnoDB). Now I'm pretty confident that storing tables on SSD, redo/Binlog/SYSTEM-tablespace on HDD will be one of the best practices for the time being.

This post is a detailed benchmarking report.

(This post is very long and focusing on InnoDB only. If you are familiar with HDD/SSD/InnoDB architecture and understand what my blog title means, skipping section 1 (general theory) then reading from section 2 (benchmarking results) would be fine. )

1. General Theory of HDD, SSD and InnoDBSSD is often called as a disruptive storage technology. Currently storage capacity is much smaller and unit price is much higher than HDD, but the situation is very rapidly changing. In the near future many people will use SSD instead of HDD.

From DBA's standpoint, you have a couple of choices for storage allocation.

- Storing all files on SSD, not using HDD at all

- Storing all files on HDD, not using SSD at all

- Using SSD and HDD altogether (some files on SSD, others on HDD).

Which is the best approach? My favorite approach is storing tables on SSD, storing Redo Log files, Binary Log files, and SYSTEM-tablespace(ibdata) on HDD. I describe a detailed reason and some DBT-2 benchmarking results below.

1.1 HDD is very good at sequential writes if write cache is enabledUsing battery backed up write cache(BBWC) is one of the best practices for RDBMS world. BBWC is normally equipped with hardware raid controller. Normally InnoDB flushes data to disks (redo log files) per each commit to guarantee durability. You can change this behavior by changing innodb_flush_log_at_trx_commit parameter to 0 or 2, but default setting (1) is recommended if you need durability.

Without write cache, flushing to disks require disk rotation and disk seek. Redo logs are sequentially written, so disk seek doesn’t happen when the disk is dedicated for redo log files, but disk rotation still happens. Average disk rotation overhead is 1/2 round. Theoretical maximum throughput is only 500 flushes per second when using single 15000RPM drive (15,000 * 2 / 60 seconds = 500).

If using write cache, the situation is greatly improved. Flushing to write cache does not require disk seek/rotation so finishes very quickly. The data in write cache will be written to disk with optimal order (i.e. writing many data at one time), so total throughput is highly improved. Over 10,000 fsync() per second is not impossible.

Sometimes people say that write cache is not effective because the total write volume is the same. This is not correct. On HDD, disk seek & rotation overhead is huge. By using write cache, internally a lot of write operations are converted into small number of write operations. Then the total number of disk seeks & rotations can be much smaller. For random-write oriented files (i.e. index files), disk seeks & rotations still happen (but reduced so still very effective) , but for sequential-write oriented files (i.e. REDO log files) write cache is very effective.

Write cache needs to be protected by battery in order not to be invalidated by power failure etc.

Using H/W RAID + BBWC + HDD is used for years and now it’s a proven technology. You can setup with pretty reasonable cost.

Note that HDD/SSD storage devices also have write cache themselves, but don't turn on. It's very dangerous because it's not protected by battery so data in cache is destroyed by power failure etc.

1.2 SSD is very good at random reads, good at random writes, not so good at sequential writes, compared to HDDCurrently read/write performance on SSD highly depends on products and device drivers. SSD is very fast for random reads. I could get over 5,000 *random reads* on single Intel X25-E SSD drive. For HDD, normally only a few hundreads of random reads is possible. Over 10 times difference is huge.

For sequential reads, performance difference is smaller but there is still a significant difference on some SSDs(When I tested, single X25-E drive was two times faster than two SAS 15,000RPM RAID1 drives).

For writes, I heard both positive and negative information. I tested Intel X25-E SSD then it worked very well (I got over 2,000 random writes on single drive with write cache). But I heard from many people who tested different SSDs that some SSDs don't perform well for writes. Some cases it was much slower than HDD, some cases write performance gradually dropped after a few months, some cases it freezed for a while. You would also be concerned with a "Write Endurance" issue on SSD when running on production environments.

On HDD, there is a huge difference between random writes and sequential writes. Sequential writes is very fast on HDD with write cache. On the other hand, on SSD, there is not so much difference between random writes and sequential writes. Though performance highly depends on SSD drives themselves, you probably notice that sequential write performance is not so different between HDD and SSD (or even faster on HDD). Since HDD has a longer history and is cheaper than SSD, currently there is not a strong reason to use SSD for sequential write oriented files.

1.3 MySQL/InnoDB files From these perspectives, it would make sense to locate random i/o oriented files on SSD, sequential write oriented files on HDD. Let's classify MySQL/InnoDB files as follows.

Random i/o oriented:

- Table files (*.ibd)

- UNDO segments (ibdata)

Sequential write oriented:

- REDO log files (ib_logfile*)

- Binary log files (binlog.XXXXXX)

- Doublewrite buffer (ibdata)

- Insert buffer (ibdata)

- Slow query logs, error logs, general query logs, etc

By default, table files (*.ibd) are not created but included in InnoDB's SYSTEM-tablespace(ibdata). By using "innodb_file_per_table" parameter, *.ibd files are created then table/index data are stored there. Table files are of course randomly read/written so storing on SSD is better. Note that write cache is also very effective on SSD so using H/W raid with BBWC + SSD would be nice.

REDO log files and Binary log files are transactional logs. They are sequentially written so you can get very good performance on HDD with write cache. Sequential disk read will happen on recovery, but normally this will not cause a performance problem because log file size is normally much smaller than data files and sequential reads are much faster than random reads (happening on data files).

Doublewrite buffer is a special feature for InnoDB. InnoDB first writes flushed pages to the "doublewrite buffer", then writing the pages to their correct positions on data files. This is to avoid page corruption (Without doublewrite buffer, page might get corrupted if power failure etc happens during writing to disks). Writing to doublewrite buffer is sequential so highly optimized for HDD. Sequential read will happen on recovery. MySQL has a parameter "skip_innodb_doublewrite" to disable doublewrite buffer, but disabling is dangerous. The amount of write data to doublewrite buffer is equivalent to that to data areas, so this is not negligible.

Insert buffer is also a special feature for InnoDB. If non-unique, secondary index blocks are not in memory, InnoDB inserts entries to a "insert buffer" to avoid random disk i/o operations. Periodically, the insert buffer is merged into the secondary index trees in the database. Insert buffer enables to reduce the number of disk i/o operations by merging i/o requests to the same block, and random i/o operations can be sequential. So insert buffer is also highly optimized for HDD. Both sequential writes and reads will happen in normal operations.

UNDO segments are random i/o oriented. To guarantee MVCC, innodb needs to register old images in UNDO segments. Reading previous images from UNDO segments on disk requires random reads. If you run a very long transaction with repeatable read (i.e. mysqldump --single-transaction) or running a long query, a lot of random reads might happen, so storing UNDO segments on SSD would be better in that case. If you run only short transactions/queries, this will not be an issue.

Based on the above, I want to use "innodb_file_per_table", then storing *.ibd files on SSD, storing ib_logfile* (REDO log files), binary logs, ibdata (SYSTEM-tablespace) on HDD.

2 BenchmarkingI ran DBT-2(disk i/o intensive) to verify whether my assumption was correct or not.

2.1 Benchmarking environmentSun Fire X4150

CPU: Intel Xeon 3.3GHz, quad core, 8 cores in total

HDD: SAS 15,000RPM, 2 disks, RAID 1, write cache enabled on H/W raid controller

SSD: Intel X25-E, Single drive, write cache enabled on drive

(Note that this is dangerous and not recommended for production at all. I didn't have a H/W raid controller working well on Intel X25-E so I just turned on for benchmarking purpose only)

OS: RedHat Enterprise Linux 5.3 (2.6.18-128.el5)

Filesystem: ext3

I/O Scheduler: noop

MySQL version: 5.4.0

DBT-2 option:

./run_workload.sh -n -s 100 -t 1 -w 100 -d 600 -c 20

(Approximate datafile size is 9.5 GB)

my.cnf:

[mysqld]

basedir=/root/mysql5400

datadir=/ssd/mysql-data

innodb_data_home_dir=

innodb_data_file_path=/hdd1/ibdata1:500M:autoextend

innodb_file_per_table

innodb_log_group_home_dir=/hdd/log

innodb_log_files_in_group=2

innodb_log_file_size=512M

innodb_flush_log_at_trx_commit=1

innodb_buffer_pool_size=2G

innodb_flush_method=O_DIRECT

innodb_support_xa=0

(Binlog is disabled)

This is a disk-bound configuration. If you increase innodb_buffer_pool_size higher, you can get better results but I intentionally set lower to do disk i/o intensive loads.

2.2 Benchmarking results2.2.1 HDD vs SSD All files on HDD(two drives, RAID1): 3447.25

All files on SSD(single drive) : 14842.44

(The number is NOTPM, higher is better)

I got 4.3 times better result on SSD. As

Vadim,

Matt, and other many people have already shown, just replacing HDD with SSD works very well for DBT-2 benchmarking.

The following is iostat result.

HDD 3447.25 NOTPM

Device: rrqm/s wrqm/s r/s w/s rMB/s

sdf1 4.60 80.03 363.48 377.30 6.52

wMB/s avgrq-sz avgqu-sz await svctm %util

10.14 46.06 17.89 24.18 1.35 99.98

-----cpu-----

us sy id wa st

3 1 50 46 0

SSD 14842.44 NOTPM

Device: rrqm/s wrqm/s r/s w/s rMB/s

sda1 0.00 11.90 1738.65 1812.33 30.73

wMB/s avgrq-sz avgqu-sz await svctm %util

46.42 44.49 4.01 1.13 0.27 95.03

-----cpu-----

us sy id wa st

18 5 57 20 0

2.2.2 Storing some files on SSD, others on HDD All files on SSD : 14842.44

Redo log on HDD: 15539.8

Redo log and ibdata on HDD: 23358.63

Redo log and ibdata on tmpfs: 24076.43

Redo log and ibdata on separate SSD drives: 20450.78

These are quite interesting results. Let's see one by one.

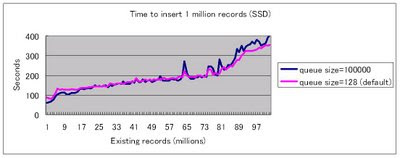

2.2.2.1 Redo log file on HDD Storing just redo log files on HDD did not have so good effect (just 4.7% improvement). DBT-2 internally executes 14.5 DML(insert/update/delete) statements per transaction on average, so commit frequency is less than typical web applications. If you execute commit more frequently, performance difference will be bigger.The following is a statistics about how many bytes were written during single DBT-2 benchmarking. You can get this information by iostat -m.

MB written on SSD: 31658

MB written on HDD: 1928

So 31658MB were written to SSD but only 1928MB were written to HDD. 20 times difference is huge so just moving redo logs from SSD to HDD would not have big impact. Note that this statistics highly depends on applications. For DBT-2, this doesn't work anyway.

2.2.2.2 Redo log and ibdata on HDDBy storing redo log and ibdata on HDD, I got much better result (14842.44->23358.63, 57% improvement!). Dirty pages are written twice (one for doublewrite buffer, the other for actual data area) so by moving ibdata, in which doublewrite buffer is allocated, to different drives, you can decrease the amount of writes to SSD by half. Since doublewrite buffer is sequentially written, it fits for HDD very well. Here is a result of iostat -m.

MB written on SSD : 23151

MB written on HDD : 25761

iostat & vmstat results are as follows. Apparently HDD was not so busy so working very well for sequential writes.

SSD-HDD 23358.63NOTPM

Device: rrqm/s wrqm/s r/s w/s rMB/s

sda1(SATA SSD) 0.03 0.00 2807.15 1909.00 49.48

sdf1(SAS HDD) 0.00 547.08 0.38 737.22 0.01

wMB/s avgrq-sz avgqu-sz await svctm %util

35.91 37.08 2.90 0.61 0.18 84.69

40.03 111.17 0.13 0.18 0.11 7.79

-----cpu-----

us sy id wa st

28 9 49 14 0

2.2.2.3 Redo log and ibdata on tmpfsI was interested in whether storing redo log and ibdata on HDD can get the "best" performance or not. To verify this, I tested to store these files on tmpfs. This is not useful in production environment at all, but it's fine to check performance higher limit. tmpfs should be faster than any other high-end SSD(including PCIe SSD). If there is not so big performance difference, moving these files from HDD to very fast SSD is not needed. Here is a result.

Redo log and ibdata on HDD(NOTPM): 23358.63

Redo log and ibdata on tmpfs(NOTPM): 24076.43

I got only 3% performance improvement. Actually this is not suprise because HDD was not maxed out when testing "redo log and ibdata on HDD".

2.2.2.4 Redo log and ibdata on separate SSD driveSome people might be interested in how performance is different if using two SSD drives, one for *ibd, the other for Redo/ibdata. The following is a result.

Redo log and ibdata on HDD(NOTPM): 23358.63

Redo log and ibdata on separate SSD drives(NOTPM): 20450.78

So I got 12.5% worse result compared to HDD. Does this mean Intel X25-E is worse for sequential writes than SAS HDD? Actually the situation seemed not so simple. When I did a very simple sequential write oriented benchmarking (mysqlslap insert) on single drive, I got very close numbers compared to HDD. So there must be other reasons for performance drop. The following is iostat result.

SSD-SSD 20450.78NOTPM

Device: rrqm/s wrqm/s r/s w/s rMB/s

sda1(SATA SSD) 0.00 0.00 2430.32 1657.15 43.32

sdb1(SATA SSD) 0.00 12.60 0.75 1017.43 0.01

wMB/s avgrq-sz avgqu-sz await svctm %util

31.06 37.27 2.29 0.56 0.16 67.28

34.46 69.33 0.57 0.56 0.27 27.02

SSD-HDD 23358.63NOTPM

Device: rrqm/s wrqm/s r/s w/s rMB/s

sda1(SATA SSD) 0.03 0.00 2807.15 1909.00 49.48

sdf1(SAS HDD) 0.00 547.08 0.38 737.22 0.01

wMB/s avgrq-sz avgqu-sz await svctm %util

35.91 37.08 2.90 0.61 0.18 84.69

40.03 111.17 0.13 0.18 0.11 7.79

sda activity on SSD-SSD test was about 15% lower than on SSD-HDD test even though sdb was not fully busy. I have not identified the reason yet,but currently I assume that SATA interface (Host Bus Adapter port) got a bit saturated. I'll try other H/W components (SATA HDD, using different HBAs which has many more ports, etc) and see what will happen in the near future.

Anyway, any high end SSD won't beat tmpfs numbers(24076.43NOTPM) so HDD(23358.63NOTPM) would be enough.

3 Conclusion Now I'm pretty confident that for many InnoDB based applications it would be a best practice to store *.ibd files on SSD, and store Redo log files, binary log files, and ibdata (SYSTEM-tablespace) on HDD. I intentionally disabled binary logs for these benchmarking because currently binlog has concurrency issues (breaking InnoDB group-commit). Hopefully this issue will be fixed soon in future 5.4 so I'll be able to show you detailed results at that time. In theory same principles as redo logs(storing on HDD) can be applied.

I have done many other benchmarks (disabling doublewrite buffer, running with AUTOCOMMIT, UNDO log performance difference between HDD and SSD, etc) so I'd like to share some interesting results in other posts.

A very interesting point I have seen is that "The amount of disk reads/writes" really matters on SSD, which does not matter on HDD. This is because disk seeks & rotation overhead is very small on SSD, so data transfer speed relatively got important. For example, doublewrite buffer really influenced performance on SSD, which did not have impact on HDD. This point might force architecture changes for RDBMS. From this perspective, I am highly impressed by PBXT. I'd like to do intensive tests on PBXT in the near future.